The only thing worse than the “AI revolution” is no “AI revolution.”

An exploration into fading hopes for "AI."

“I who have been called wise, I who solved the famous riddle, am proved a fool.”

-Sophocles

When I first began drafting this piece on the weakening economic prospects of artificial intelligence, I set it aside to write an urgent stack on an impending cataclysm that was revealed to me in a vision while meditating in my giant copper pyramid... (I kid, but not really). In the intervening weeks, however, much of what I had been outlining has begun to surface in the news cycle and the Twittersphere. At the time of writing, my concerns felt somewhat transgressive and contrarian. This perspective is now moving closer to the mainstream, but I still think it is a pressing topic to write about: the likelihood is increasing that the paradigm-shifting potential of AI will prove far less pronounced than many have anticipated, and that this may have severe consequences.

The core issue is that the current capabilities and future potential of AI, particularly its economic potential in the form of productivity growth, may be substantially overstated. (I am bracketing out of this stack other externalities of AI adoption, such as its impact on universities, on academia, and on how we use our time, which other writers have explored in depth.) Governments, firms, and investors are planning around scenarios of rapid and transformative impact. But in the short term, and plausibly into the medium term, such expectations are becoming more difficult to justify. More troubling still, today’s dominant frameworks, especially large language models (LLMs) and large reasoning models (LRMs), face deep structural bottlenecks that are unlikely to be resolved quickly. These constraints not only dampen near-term productivity gains but may also generate significant second- and third-order effects with potentially serious and destabilizing consequences.

“The tsunami of a lifetime is coming…”

The hype surrounding artificial intelligence has been extraordinary. Narratives range from dire warnings that large language models (LLMs) herald the imminent arrival of artificial general intelligence (AGI), to people convinced that the chatbot they are interacting with is in some sense “real,” to forecasts of a looming tsunami of unemployment as machines displace human labor. (Questions around the “consciousness” of AI have been tackled expertly by other writers.) Much of this fear-mongering about AI’s “power,” I would argue, is not a reflection of reality but rather marketing. In retrospect, many of these claims are likely to appear not just overstated but laughable, in the same way we look back on the Y2K scare.

At the center of this wave of expectation is the claim that a jobs revolution is imminent and that artificial intelligence will soon restructure labor markets, rendering vast categories of work obsolete. The reality, however, looks very different. To date, the number of jobs lost directly to AI remains relatively small, and many of the layoffs attributed to “AI disruption” appear to be part of a broader pattern of stagnation and restructuring within the private sector, particularly in technology firms.

To explore this more directly, I posed the question to ChatGPT itself: when might it begin displacing the kinds of lower-skilled roles most often cited, such as clerical workers, administrative assistants, and similar occupations? The model asserted that it is already “basically capable” of performing such tasks, but acknowledged that “several practical obstacles remain” before it could serve as a complete replacement. What are these “practical obstacles”? Investigating this takes us to the fundamental issue with AI at the present moment, one that is only now beginning to be discussed in depth: reliability.

Perceptions of AI:

A widely discussed paper recently published by Apple has renewed debate about the true capabilities and limitations of artificial intelligence. In this study, Apple researchers evaluated two leading paradigms of AI systems: Large Language Models (LLMs), which generate direct responses, and Large Reasoning Models (LRMs), which incorporate structured reasoning steps before producing an answer. The findings appear worrying for AI boosters. LRMs performed better than LLMs on moderately complex tasks, but the advantage did not hold at either extreme: on very simple problems the reasoning models offered no improvement over standard LLMs, and on highly complex problems both approaches broke down, often producing inaccurate, incomplete, or entirely failed solutions. This pattern highlights fundamental bottlenecks in current approaches to AI reasoning, suggesting that raw scale and reasoning scaffolding alone may not be sufficient to achieve robust, general problem-solving.

This brings us to the broader issue at the heart of today’s AI discourse: the gap between AI’s actual capabilities and its public perception. The promises surrounding AI are often amplified far beyond what the technology can currently deliver. At the same time, as alluded to earlier, darker narratives of AI as an existential threat capable of rendering humanity obsolete saturate popular media. The result is a climate of greatly exaggerated expectations.

Recent research underscores this disconnect. A study by the Association for the Advancement of Artificial Intelligence found that 79% of AI experts believe the public significantly overestimates AI’s capabilities. This makes sense when one considers the relentless messaging: on one hand, marketing hype from industry promising transformative productivity; on the other, apocalyptic warnings of machines judging humans “inefficient” and replacing us entirely. Both narratives distort reality heavily, making it harder to ground discussions of AI in evidence, nuance, and practical limits.

Ultimately, the public’s tendency to overestimate AI’s capabilities is not the most pressing concern on its own. The deeper issue lies in the fact that, according to the same report, 61% of AI experts believe that the problem of factual reliability is unlikely to be solved in the near future. This strikes at the core of how AI can realistically be deployed. The article itself makes the case that AI should be regarded as an assistant rather than a replacement for human labor. However, this creates a significant conundrum: if AI outputs still require careful human oversight and verification, then the promised gains in efficiency are far less dramatic than many anticipate. Instead of eliminating vast amounts of human effort, AI in its current state may only deliver incremental productivity boosts, not the seismic transformation that dominates public imagination.

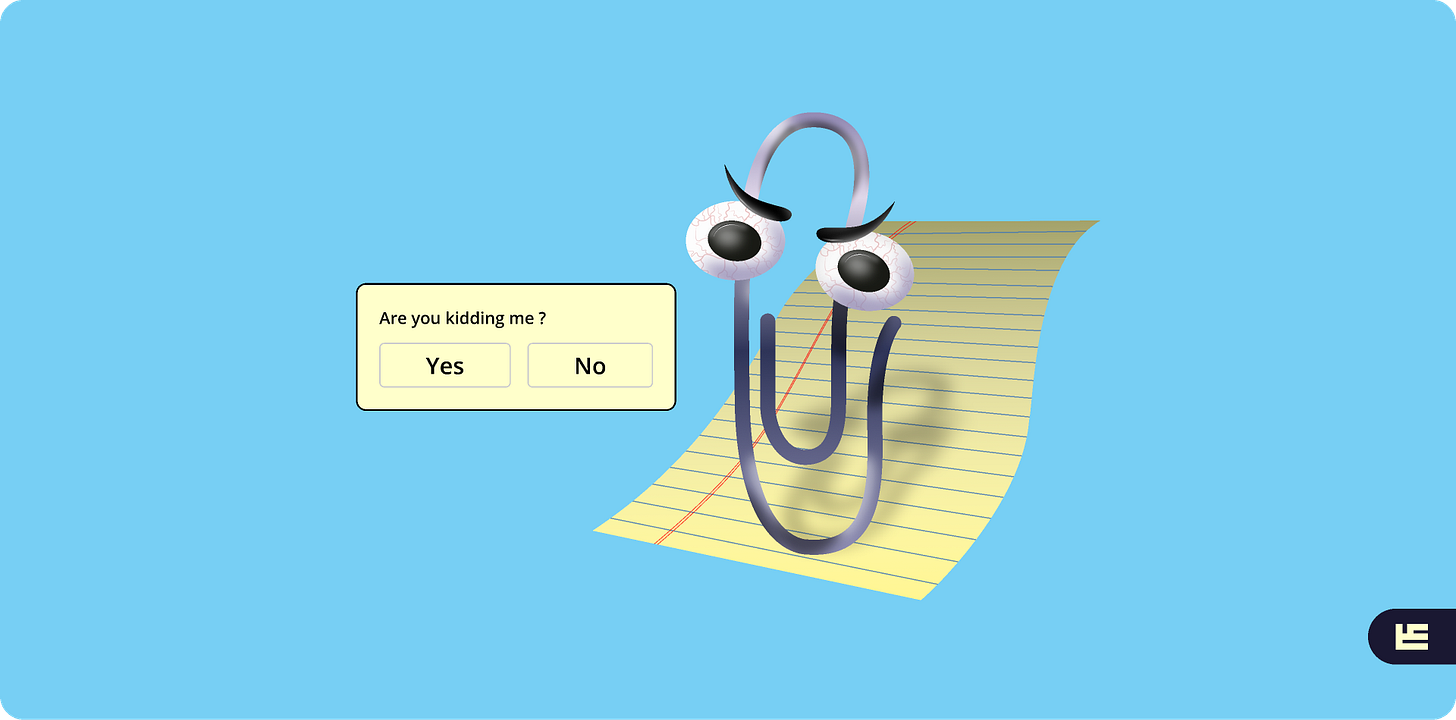

Vast sums of money are being funneled into AI development and infrastructure. But if reliability bottlenecks persist, as a majority of experts expect, the technology risks functioning more like a $4 trillion Clippy than a revolutionary industrial tool.

Why This Is a Big Problem

There are two reasons why this issue is so serious: the first is concerning, and the second is probably more so.

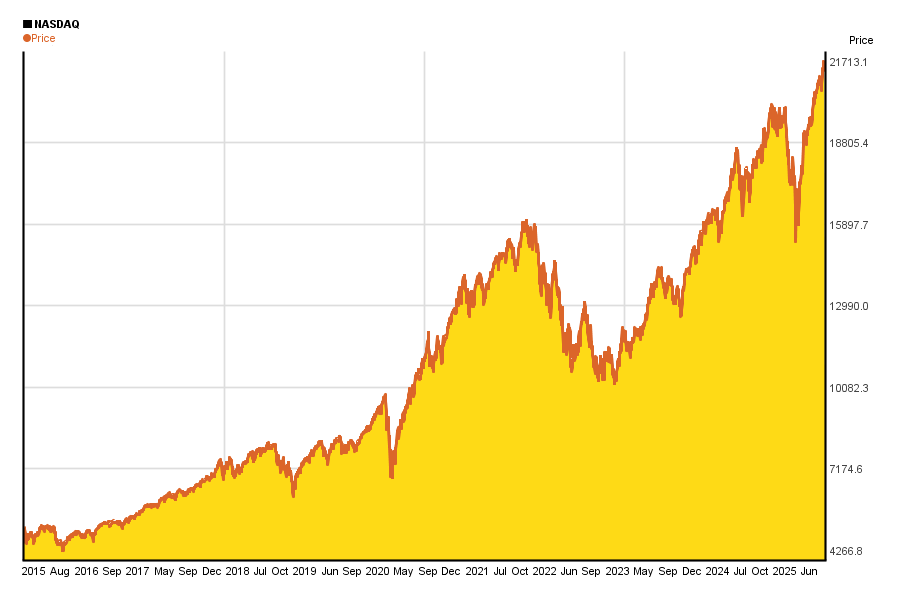

The first reason is that some commentators have already begun drawing parallels between today’s AI boom and the dot-com bubble of the 1990s. Back then, immense amounts of capital poured into the rapidly growing internet sector. The enthusiasm and much of the investment proved premature, and when expectations could not be met, the bubble burst, triggering a painful market crash that devastated portfolios and livelihoods. Today’s markets look similar, particularly the NASDAQ, which is heavily weighted toward technology companies and has seen a stratospheric rise in valuations. At the same time, sobering signals are beginning to surface: research papers like the Apple study cited earlier are highlighting bottlenecks in AI’s reasoning ability, and even figures such as Sam Altman, once among the loudest evangelists of AI’s revolutionary potential, have tempered their rhetoric. If this recalibration continues and the public’s inflated perception of AI is corrected abruptly, we may well witness an AI bubble collapse that mirrors the dot-com crash.

However, our economic context today is fundamentally different from the 1990s. Since the 2008 financial crisis, central banks and governments have relied heavily on monetary interventions (low interest rates and quantitative easing, i.e. “funny money”) to sustain growth and shield markets from shocks. Thus, we could avoid a sharp crash, but at the cost of prolonging distortions in capital allocation and worsening living conditions. Investment would continue to flow disproportionately into AI, not because of proven productivity gains, but because of sustained hype and artificial financial cushioning.

The second problem is far more serious and has direct implications for the stability of Western economies. As I have argued previously, these economies are starved of productivity growth, a long-standing structural issue that predates the AI boom. Over the past two decades, productivity gains in advanced economies have stagnated despite some big advances in digital technology, and governments are now scrambling for ways to reverse this trend. Mounting public deficits, driven by spending pressures such as rising welfare costs, together with ongoing geopolitical pressures, have left many Western states in precarious fiscal positions. The UK, for example, has been repeatedly warned by the Office for Budget Responsibility that without sustained productivity growth, the country faces an unsustainable debt path. Similarly, in the United States, the Congressional Budget Office projects federal debt to reach 181% of GDP by 2053, much of it contingent on assumed efficiency and growth gains. In both cases, AI-driven productivity growth is explicitly factored into future fiscal models as a potential savior over the next 5–10 years.

This reliance is extremely risky if AI cannot deliver on its transformative promises. As explored earlier, if AI proves to be less of a revolutionary force and more of a narrow assistant requiring ongoing human oversight, the productivity boost will be incremental at best. Recent evidence already points in this direction: McKinsey’s 2023 report suggested that work automation, combining generative AI with other technologies, might add 0.2–3.3 percentage points to annual productivity growth, but only under “broad and effective adoption scenarios,” a best case that many experts view as unlikely given current factual reliability and oversight problems. If governments have baked ambitious AI productivity gains into their debt forecasts, and those gains fail to materialise, the consequences could be severe. Countries like the UK, Italy, or even Japan, which are already carrying historic debt burdens, could find themselves unable to stabilize their finances, potentially leading to the “old Greece 2010 treatment.”

In short, this is not simply a case of public perception being inflated; it is a case of entire governments potentially mispricing the future. Just as the public overestimates what AI can do, policymakers may also have drunk the same Kool-Aid in their long-term economic planning. And unlike individual hype cycles, this overestimation could leave states with few levers to correct course, further exacerbating the economic fragility of the West.

In conclusion, AI systems such as LLMs are somewhat impressive and useful tools. Yet their capabilities are limited, and the sweeping promises surrounding their future potential appear massively overstated in the public imagination. At a time when Western economies face severe structural pressures and mounting fiscal challenges, the danger lies in policymakers and markets overinvesting in AI as a supposed economic savior. If these expectations fail to materialize, the consequences could be very serious...

Well said. I dearly wish I thought our elites had a backup plan but... I don't think they have a backup plan.

I wouldn’t necessarily expect gains in productivity due to IT improvements. Productivity growth had been mediocre since the 1970s. I understand the term is Solow’s Paradox.

We are seeing from governments more and more desperation moves. Here in Canada we’ve seen governments banking on electric cars and pledging $50 billion in subsidies to various industrialists, some of whom have already gone bust.

One important point is that it takes electricity to run AI and electric cars, and we just don’t have it. People are acting like electricity is cheap and easy to produce. It isn’t. So these ideas are doomed to begin with.